Ch8-1 Offscreen Rendering

The main.cpp of this section corresponds to Ch8-1.hpp in the sample code.

So far we have always rendered directly to the screen, that is, to swapchain images.

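Offscreen rendering means rendering to images other than the swapchain images. It is useful because:

1. The result can be processed and then presented to a swapchain image later, either by sampling it (for post-processing, rotating the image, and so on) or by blitting it (when you only need to show a picture-in-picture).

2. The rendering result can be used as a texture that stays in use for a long time while the program runs.

3. The program may have no swapchain at all (for example, a console program that only generates images and saves them to files).

4. The drawn content needs to be kept for later (the current frame's swapchain image can of course also be kept for the next frame, but since there are several swapchain images, that is cumbersome to handle).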

The offscreen rendering workflow

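Building on the code you wrote in Ch7, the steps for rendering offscreen and sampling the result to the screen are:

1. Create the image attachment

2. Create the render pass and framebuffer for offscreen rendering

3. Write the shaders and create the pipeline for offscreen rendering (you could keep using the triangle ones, but let's switch to something else!)

4. Write the shaders and create the pipeline for rendering to the screen (you could keep using... but let's switch to something else!)

5. Allocate the descriptor set (its layout is created in the previous step) and write the image attachment's view into the descriptor

6. Render to the offscreen framebuffer

7. Sample the texture and render to the swapchain image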

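I intend to record the last two steps, "offscreen rendering" and "rendering to the screen", in the same command buffer, with no synchronization objects such as semaphores or fences in between.

The two render passes for "offscreen rendering" and "rendering to the screen" could become two subpasses of a single render pass, but we won't do that in this section.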

Image attachments

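Until now we have always used the image views of the swapchain images as attachments. Creating an image attachment from scratch means creating an image first and then creating a view for it.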

In VKBase+.h, define the class attachment in the vulkan namespace:

```cpp
class attachment {
protected:
    imageView imageView;
    imageMemory imageMemory;
    //--------------------
    attachment() = default;
public:
    //Getter
    VkImageView ImageView() const { return imageView; }
    VkImage Image() const { return imageMemory.Image(); }
    const VkImageView* AddressOfImageView() const { return imageView.Address(); }
    const VkImage* AddressOfImage() const { return imageMemory.AddressOfImage(); }
    //Const Function
    //Returns the information needed when writing a descriptor
    VkDescriptorImageInfo DescriptorImageInfo(VkSampler sampler) const {
        return { sampler, imageView, VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL };
    }
};
```

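Then derive two classes from it: the color attachment colorAttachment and the depth-stencil attachment depthStencilAttachment (this section doesn't need a depth-stencil attachment yet; it's preparation for later).

Input attachments need no wrapper of their own: an input attachment is simply an image attachment read by a later subpass, so it's enough to specify VK_IMAGE_USAGE_INPUT_ATTACHMENT_BIT when creating the color/depth-stencil attachment. A resolve attachment, in turn, is nothing more than a color/depth-stencil attachment with a sample count of 1.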

```cpp
class colorAttachment :public attachment {
public:
    colorAttachment() = default;
    colorAttachment(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0) {
        Create(format, extent, layerCount, sampleCount, otherUsages);
    }
    //Non-const Function
    void Create(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0) {
        /*To be filled*/
    }
    //Static Function
    //Checks whether images of a given format can be used as color attachments
    static bool FormatAvailability(VkFormat format, bool supportBlending = true) {
        /*To be filled*/
    }
};
class depthStencilAttachment :public attachment {
public:
    depthStencilAttachment() = default;
    depthStencilAttachment(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0, bool stencilOnly = false) {
        Create(format, extent, layerCount, sampleCount, otherUsages, stencilOnly);
    }
    //Non-const Function
    void Create(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0, bool stencilOnly = false) {
        /*To be filled*/
    }
    //Static Function
    //Checks whether images of a given format can be used as depth-stencil attachments
    static bool FormatAvailability(VkFormat format) {
        /*To be filled*/
    }
};
```

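Let's fill in the two FormatAvailability(...) functions first. The members optimalTilingFeatures and linearTilingFeatures of VkFormatProperties describe the features that images of a given format have when their data arrangement (VkImageCreateInfo::tiling) is optimal tiling and linear tiling, respectively.

We don't care about the format features under linear tiling, for these reasons:

1. Discrete GPUs support linearly tiled render targets poorly, possibly not at all.

2. We always prefer optimal tiling for the best performance.

Back in Ch5-0 VKBase+.h we retrieved the VkFormatProperties of every format. Now use the previously prepared function FormatProperties(...) to get a format's properties, then bitwise-AND its optimalTilingFeatures member with the corresponding VkFormatFeatureFlagBits value to find out whether images of that format can be used as color/depth-stencil attachments: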

```cpp
//Inside class colorAttachment
static bool FormatAvailability(VkFormat format, bool supportBlending = true) {
    return FormatProperties(format).optimalTilingFeatures & VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BIT << uint32_t(supportBlending);
}
//Inside class depthStencilAttachment
static bool FormatAvailability(VkFormat format) {
    return FormatProperties(format).optimalTilingFeatures & VK_FORMAT_FEATURE_DEPTH_STENCIL_ATTACHMENT_BIT;
}
```


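Shifting VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BIT left by 1 gives VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BLEND_BIT, which means the format can be used as a color attachment and also supports blending. Since << binds more tightly than &, no extra parentheses are needed.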
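The shift can be checked without a GPU. The following standalone sketch hardcodes the three VkFormatFeatureFlagBits values as they appear in vulkan_core.h (so it compiles without the Vulkan SDK) and treats optimalTilingFeatures as a plain integer; the function names are hypothetical stand-ins for the two FormatAvailability(...) overloads.

```cpp
#include <cstdint>

// VkFormatFeatureFlagBits values as defined in vulkan_core.h (hardcoded so no SDK is needed).
constexpr std::uint32_t kColorAttachmentBit        = 0x00000080; // VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BIT
constexpr std::uint32_t kColorAttachmentBlendBit   = 0x00000100; // VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BLEND_BIT
constexpr std::uint32_t kDepthStencilAttachmentBit = 0x00000200; // VK_FORMAT_FEATURE_DEPTH_STENCIL_ATTACHMENT_BIT

// Same expression as colorAttachment::FormatAvailability.
// << binds tighter than &, so this is features & (bit << shift).
constexpr bool ColorAttachmentAvailable(std::uint32_t optimalTilingFeatures, bool supportBlending = true) {
    return optimalTilingFeatures & kColorAttachmentBit << std::uint32_t(supportBlending);
}

// Same expression as depthStencilAttachment::FormatAvailability.
constexpr bool DepthStencilAttachmentAvailable(std::uint32_t optimalTilingFeatures) {
    return optimalTilingFeatures & kDepthStencilAttachmentBit;
}

static_assert((kColorAttachmentBit << 1) == kColorAttachmentBlendBit, "shifting by one selects the blend bit");
```

A format whose optimalTilingFeatures contains only the attachment bit passes with supportBlending = false but fails with the default true, because the check then looks at the blend bit alone.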

Next, fill in colorAttachment::Create(...):

```cpp
//Inside class colorAttachment
void Create(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0) {
    VkImageCreateInfo imageCreateInfo = {
        .imageType = VK_IMAGE_TYPE_2D,
        .format = format,
        .extent = { extent.width, extent.height, 1 },
        .mipLevels = 1,
        .arrayLayers = layerCount,
        .samples = sampleCount,
        .usage = VK_IMAGE_USAGE_COLOR_ATTACHMENT_BIT | otherUsages
    };
    imageMemory.Create(
        imageCreateInfo,
        VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT | bool(otherUsages & VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT) * VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT);
    imageView.Create(
        imageMemory.Image(),
        layerCount > 1 ? VK_IMAGE_VIEW_TYPE_2D_ARRAY : VK_IMAGE_VIEW_TYPE_2D,
        format,
        { VK_IMAGE_ASPECT_COLOR_BIT, 0, 1, 0, layerCount });
}
```


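The memory properties should of course include VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT for the best performance. In addition, following the rules of lazy allocation, if the image usage includes VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT, the memory properties should include VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT.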
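The `bool(...) * ...` expression above is a branch-free way to add a flag conditionally: bool(...) is 0 or 1, so the product is either 0 or the LAZILY_ALLOCATED bit. A minimal sketch with the flag values copied from vulkan_core.h (the function name is hypothetical):

```cpp
#include <cstdint>

// Flag values as defined in vulkan_core.h.
constexpr std::uint32_t kUsageTransferDst         = 0x02; // VK_IMAGE_USAGE_TRANSFER_DST_BIT
constexpr std::uint32_t kUsageSampled             = 0x04; // VK_IMAGE_USAGE_SAMPLED_BIT
constexpr std::uint32_t kUsageTransientAttachment = 0x40; // VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT
constexpr std::uint32_t kMemoryDeviceLocal        = 0x01; // VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT
constexpr std::uint32_t kMemoryLazilyAllocated    = 0x10; // VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT

// Same expression as in colorAttachment::Create: bool(...) is 0 or 1,
// so the product contributes either nothing or the LAZILY_ALLOCATED bit.
constexpr std::uint32_t AttachmentMemoryProperties(std::uint32_t otherUsages) {
    return kMemoryDeviceLocal | bool(otherUsages & kUsageTransientAttachment) * kMemoryLazilyAllocated;
}
```

For the canvas later in this section (SAMPLED | TRANSFER_DST) this yields plain device-local memory.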


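The image view type branches on the layer count. However, if you later intend to sample the rendered image as a single-layer 2D texture array (rather than a plain 2D texture), it's also fine to specify VK_IMAGE_VIEW_TYPE_2D_ARRAY when the layer count is 1 (provided the device supports multi-layer framebuffers).

By comparison, depthStencilAttachment::Create(...) has an extra piece of logic that determines VkImageSubresourceRange::aspectMask from the image format: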

```cpp
//Inside class depthStencilAttachment
void Create(VkFormat format, VkExtent2D extent, uint32_t layerCount = 1, VkSampleCountFlagBits sampleCount = VK_SAMPLE_COUNT_1_BIT, VkImageUsageFlags otherUsages = 0, bool stencilOnly = false) {
    VkImageCreateInfo imageCreateInfo = {
        .imageType = VK_IMAGE_TYPE_2D,
        .format = format,
        .extent = { extent.width, extent.height, 1 },
        .mipLevels = 1,
        .arrayLayers = layerCount,
        .samples = sampleCount,
        .usage = VK_IMAGE_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT | otherUsages
    };
    imageMemory.Create(
        imageCreateInfo,
        VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT | bool(otherUsages & VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT) * VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT);
    //Determine the aspect mask-------------------------
    VkImageAspectFlags aspectMask = (!stencilOnly) * VK_IMAGE_ASPECT_DEPTH_BIT;
    if (format > VK_FORMAT_S8_UINT)
        aspectMask |= VK_IMAGE_ASPECT_STENCIL_BIT;
    else if (format == VK_FORMAT_S8_UINT)
        aspectMask = VK_IMAGE_ASPECT_STENCIL_BIT;
    //--------------------------------------------------
    imageView.Create(
        imageMemory.Image(),
        layerCount > 1 ? VK_IMAGE_VIEW_TYPE_2D_ARRAY : VK_IMAGE_VIEW_TYPE_2D,
        format,
        { aspectMask, 0, 1, 0, layerCount });
}
```


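The if-else logic works like this: among the few depth-stencil formats, only VK_FORMAT_S8_UINT stores stencil values alone, while the depth-stencil formats whose enum values are greater than VK_FORMAT_S8_UINT store both depth and stencil values. (The comparison is therefore only meaningful when format is already known to be a depth-stencil format.)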


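If the format contains both depth and stencil components and you'll later use only one of depth testing or stencil testing, aspectMask may include both aspects, but specifying just one of them is also fine.

When the view is used as an input attachment or a texture, however, you must specify exactly one of VK_IMAGE_ASPECT_DEPTH_BIT or VK_IMAGE_ASPECT_STENCIL_BIT.

About the stencilOnly parameter:

If you only intend to use depth testing, a depth-only format is enough: the Vulkan specification requires implementations to support VK_FORMAT_D16_UNORM for depth-stencil attachments, plus at least one of VK_FORMAT_X8_D24_UNORM_PACK32 (X8 is a padding byte) and VK_FORMAT_D32_SFLOAT.

If you only intend to use stencil testing, however, the implementation doesn't necessarily support VK_FORMAT_S8_UINT for depth-stencil attachments, so you may have to use a format that also carries depth. stencilOnly is there to leave depth out of aspectMask when the format has a depth component (which is convenient when you need to sample the stencil attachment).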
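The aspect-mask branches and the stencilOnly fallback can be sketched together without Vulkan. Assumptions: the VkFormat and aspect values are copied from vulkan_core.h; PickStencilFormat and its candidate order are my own illustration, not part of the tutorial's code, and its `isSupported` parameter stands in for depthStencilAttachment::FormatAvailability. std::optional needs C++17.

```cpp
#include <cstdint>
#include <optional>

// VkFormat values of the seven depth/stencil formats, as defined in vulkan_core.h.
enum class DsFormat : std::uint32_t {
    D16_UNORM = 124, X8_D24_UNORM_PACK32 = 125, D32_SFLOAT = 126, S8_UINT = 127,
    D16_UNORM_S8_UINT = 128, D24_UNORM_S8_UINT = 129, D32_SFLOAT_S8_UINT = 130
};
constexpr std::uint32_t kAspectDepth   = 0x2; // VK_IMAGE_ASPECT_DEPTH_BIT
constexpr std::uint32_t kAspectStencil = 0x4; // VK_IMAGE_ASPECT_STENCIL_BIT

// Same branches as depthStencilAttachment::Create. Only meaningful for the formats above:
// a color format with a larger enum value would also get the stencil bit.
constexpr std::uint32_t DepthStencilAspectMask(DsFormat format, bool stencilOnly = false) {
    std::uint32_t aspectMask = (!stencilOnly) * kAspectDepth;
    if (format > DsFormat::S8_UINT)
        aspectMask |= kAspectStencil;
    else if (format == DsFormat::S8_UINT)
        aspectMask = kAspectStencil;
    return aspectMask;
}

struct StencilChoice { DsFormat format; bool stencilOnly; };

// Hypothetical helper: prefer the pure stencil format; otherwise fall back to a combined
// format and set stencilOnly so that the view's aspectMask leaves depth out.
template <typename Pred>
constexpr std::optional<StencilChoice> PickStencilFormat(Pred isSupported) {
    constexpr DsFormat candidates[] = { DsFormat::S8_UINT, DsFormat::D24_UNORM_S8_UINT,
                                        DsFormat::D32_SFLOAT_S8_UINT, DsFormat::D16_UNORM_S8_UINT };
    for (DsFormat f : candidates)
        if (isSupported(f))
            return StencilChoice{ f, f != DsFormat::S8_UINT };
    return std::nullopt; // no stencil-capable attachment format at all
}
```

If S8_UINT is unsupported, the helper returns a combined format with stencilOnly = true, and DepthStencilAspectMask then yields only the stencil bit, which is what sampling the stencil aspect requires.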

CreateRpwf_Canvas

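Now, in EasyVulkan.hpp, in the easyVulkan namespace, define the struct renderPassWithFramebuffer and the function CreateRpwf_Canvas(...), which creates the image attachment, the render pass, and the framebuffer: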

```cpp
namespace easyVulkan {
    struct renderPassWithFramebuffer {
        renderPass renderPass;
        framebuffer framebuffer;
    };
    const auto& CreateRpwf_Canvas(VkExtent2D canvasSize) {
        static renderPassWithFramebuffer rpwf;
        /*To be filled later*/
        return rpwf;
    }
}
```

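As the name canvas suggests, I plan to use the image attachment created here as a canvas and implement a super-crude drawing feature. The canvas size is passed in as the parameter of CreateRpwf_Canvas(...).

Define a colorAttachment object in the easyVulkan namespace, then create the image attachment in CreateRpwf_Canvas(...); let's just give it the same format as the swapchain: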

```cpp
namespace easyVulkan {
    struct renderPassWithFramebuffer {
        renderPass renderPass;
        framebuffer framebuffer;
    };
    colorAttachment ca_canvas;
    const auto& CreateRpwf_Canvas(VkExtent2D canvasSize) {
        static renderPassWithFramebuffer rpwf;
        ca_canvas.Create(graphicsBase::Base().SwapchainCreateInfo().imageFormat, canvasSize, 1, VK_SAMPLE_COUNT_1_BIT, VK_IMAGE_USAGE_SAMPLED_BIT | VK_IMAGE_USAGE_TRANSFER_DST_BIT);
        /*To be filled later*/
        return rpwf;
    }
}
```


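Since the offscreen image attachment serves as a canvas, the render pass naturally won't clear it when it begins; the image usage therefore needs VK_IMAGE_USAGE_TRANSFER_DST_BIT, so that we can clear it with vkCmdClearColorImage(...).

Now create the render pass, starting with the attachment description.

Because it's a canvas, it isn't cleared when loaded and its previous contents must be kept, so loadOp is VK_ATTACHMENT_LOAD_OP_LOAD and storeOp is VK_ATTACHMENT_STORE_OP_STORE.

In this use case the image attachment goes through the cycle "render → be sampled → render → be sampled", so both the initial and the final layout can simply be VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL.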

```cpp
const auto& CreateRpwf_Canvas(VkExtent2D canvasSize) {
    static renderPassWithFramebuffer rpwf;
    ca_canvas.Create(graphicsBase::Base().SwapchainCreateInfo().imageFormat, canvasSize, 1, VK_SAMPLE_COUNT_1_BIT, VK_IMAGE_USAGE_SAMPLED_BIT | VK_IMAGE_USAGE_TRANSFER_DST_BIT);
    VkAttachmentDescription attachmentDescription = {
        .format = graphicsBase::Base().SwapchainCreateInfo().imageFormat,
        .samples = VK_SAMPLE_COUNT_1_BIT,
        .loadOp = VK_ATTACHMENT_LOAD_OP_LOAD,
        .storeOp = VK_ATTACHMENT_STORE_OP_STORE,
        .stencilLoadOp = VK_ATTACHMENT_LOAD_OP_DONT_CARE,
        .stencilStoreOp = VK_ATTACHMENT_STORE_OP_DONT_CARE,
        .initialLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL,
        .finalLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL
    };
    /*To be filled later*/
    return rpwf;
}
```

Filling in the subpass description is trivial; let's fill in the render pass create info right away too:

```cpp
VkAttachmentReference attachmentReference = { 0, VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL };
VkSubpassDescription subpassDescription = {
    .pipelineBindPoint = VK_PIPELINE_BIND_POINT_GRAPHICS,
    .colorAttachmentCount = 1,
    .pColorAttachments = &attachmentReference
};
VkRenderPassCreateInfo renderPassCreateInfo = {
    .attachmentCount = 1,
    .pAttachments = &attachmentDescription,
    .subpassCount = 1,
    .pSubpasses = &subpassDescription,
    .dependencyCount = 2,
    .pDependencies = subpassDependencies, //subpassDependencies is defined in the next step
};
```

There are two subpass dependencies, one at the start of the subpass and one at the end. Code first:

```cpp
VkSubpassDependency subpassDependencies[2] = {
    {
        .srcSubpass = VK_SUBPASS_EXTERNAL,
        .dstSubpass = 0,
        .srcStageMask = VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT,
        .dstStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT,
        .srcAccessMask = 0,
        .dstAccessMask = VK_ACCESS_COLOR_ATTACHMENT_WRITE_BIT,
        .dependencyFlags = VK_DEPENDENCY_BY_REGION_BIT
    },
    {
        .srcSubpass = 0,
        .dstSubpass = VK_SUBPASS_EXTERNAL,
        .srcStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT,
        .dstStageMask = VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT,
        .srcAccessMask = VK_ACCESS_COLOR_ATTACHMENT_WRITE_BIT,
        .dstAccessMask = VK_ACCESS_SHADER_READ_BIT,
        .dependencyFlags = VK_DEPENDENCY_BY_REGION_BIT
    }
};
```

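Let's start with the dependency at the end, where every parameter does exactly the job it should.

For the dependency at the end of the pass, dstSubpass is VK_SUBPASS_EXTERNAL, which here effectively stands for the subsequent "render to screen".

srcStageMask and srcAccessMask should need no explanation. Sampling counts as VK_ACCESS_SHADER_READ_BIT and happens in the fragment shader stage, so dstStageMask is VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT.

The dependency at the start of the render pass is simply the reverse of the one at the end.

Its srcAccessMask is 0: srcAccessMask only controls which writes are made available, so a read access such as VK_ACCESS_SHADER_READ_BIT would be meaningless there. The visibility, in the current frame, of the previous frame's writes to this attachment is ensured by waiting on semaphore_renderingIsOver in the previous frame.

Specifying VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT as srcStageMask is actually redundant, because a fence already synchronizes the frames; VK_PIPELINE_STAGE_TOP_OF_PIPE_BIT would do (why don't I write it that way every time, you ask? To hammer this point home again and again!).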


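Finally, creating the framebuffer is completely mechanical. The whole CreateRpwf_Canvas(...) looks like this (note that the render pass must be created before the framebuffer that references it):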

```cpp
const auto& CreateRpwf_Canvas(VkExtent2D canvasSize = windowSize) {
    static renderPassWithFramebuffer rpwf;
    ca_canvas.Create(graphicsBase::Base().SwapchainCreateInfo().imageFormat, canvasSize, 1, VK_SAMPLE_COUNT_1_BIT, VK_IMAGE_USAGE_SAMPLED_BIT | VK_IMAGE_USAGE_TRANSFER_DST_BIT);
    VkAttachmentDescription attachmentDescription = {
        .format = graphicsBase::Base().SwapchainCreateInfo().imageFormat,
        .samples = VK_SAMPLE_COUNT_1_BIT,
        .loadOp = VK_ATTACHMENT_LOAD_OP_LOAD,
        .storeOp = VK_ATTACHMENT_STORE_OP_STORE,
        .stencilLoadOp = VK_ATTACHMENT_LOAD_OP_DONT_CARE,
        .stencilStoreOp = VK_ATTACHMENT_STORE_OP_DONT_CARE,
        .initialLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL,
        .finalLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL
    };
    VkSubpassDependency subpassDependencies[2] = {
        {
            .srcSubpass = VK_SUBPASS_EXTERNAL,
            .dstSubpass = 0,
            .srcStageMask = VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT,
            .dstStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT,
            .srcAccessMask = 0,
            .dstAccessMask = VK_ACCESS_COLOR_ATTACHMENT_WRITE_BIT,
            .dependencyFlags = VK_DEPENDENCY_BY_REGION_BIT
        },
        {
            .srcSubpass = 0,
            .dstSubpass = VK_SUBPASS_EXTERNAL,
            .srcStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT,
            .dstStageMask = VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT,
            .srcAccessMask = VK_ACCESS_COLOR_ATTACHMENT_WRITE_BIT,
            .dstAccessMask = VK_ACCESS_SHADER_READ_BIT,
            .dependencyFlags = VK_DEPENDENCY_BY_REGION_BIT
        }
    };
    VkAttachmentReference attachmentReference = { 0, VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL };
    VkSubpassDescription subpassDescription = {
        .pipelineBindPoint = VK_PIPELINE_BIND_POINT_GRAPHICS,
        .colorAttachmentCount = 1,
        .pColorAttachments = &attachmentReference
    };
    VkRenderPassCreateInfo renderPassCreateInfo = {
        .attachmentCount = 1,
        .pAttachments = &attachmentDescription,
        .subpassCount = 1,
        .pSubpasses = &subpassDescription,
        .dependencyCount = 2,
        .pDependencies = subpassDependencies,
    };
    //Create the render pass first: the framebuffer below references it
    rpwf.renderPass.Create(renderPassCreateInfo);
    VkFramebufferCreateInfo framebufferCreateInfo = {
        .renderPass = rpwf.renderPass,
        .attachmentCount = 1,
        .pAttachments = ca_canvas.AddressOfImageView(),
        .width = canvasSize.width,
        .height = canvasSize.height,
        .layers = 1
    };
    rpwf.framebuffer.Create(framebufferCreateInfo);
    return rpwf;
}
```

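Let's draw lines!

I plan to implement a very simple drawing feature: track the mouse cursor and connect its positions in the current frame and the previous frame with a red line.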

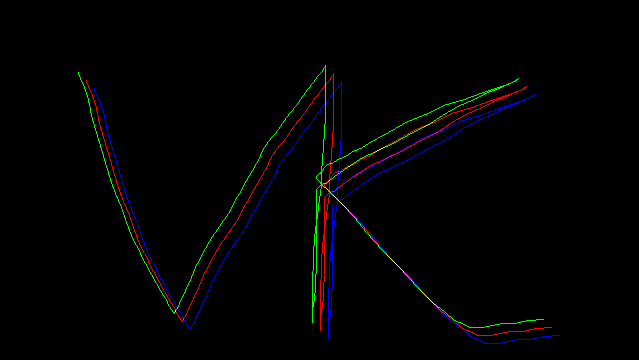

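Then, when "rendering to the screen", sample the canvas three times at three equally spaced UV coordinates, so that the line shows up as red, green, and blue lines whose colors add up. The effect is shown in the screenshot.

(I know it's not very exciting, but I didn't want to write anything whose math would be tedious to explain.)

To keep the narrative simple, this section supplies all data to the shaders through push constants, doing away with vertex/uniform buffers.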

Line-drawing shaders

The vertex shader for drawing lines, Line.vert.shader:

```glsl
#version 460
#pragma shader_stage(vertex)

layout(push_constant) uniform pushConstants {
    vec2 viewportSize;
    vec2 offsets[2];
};

void main() {
    gl_Position = vec4(2 * offsets[gl_VertexIndex] / viewportSize - 1, 0, 1);
}
```


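Here viewportSize is the viewport size, which will be equal to the canvas size.

offsets holds the two points' positions, in pixels, relative to the viewport's top-left corner.

The viewport corresponds to the whole NDC range [-1, 1]. For any point with pixel coordinate pos, its NDC coordinate within the viewport is (pos - viewportSize / 2) / (viewportSize / 2), i.e. 2 * pos / viewportSize - 1.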
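The same mapping as a scalar function applied per component (a minimal sketch; both function names are hypothetical). No y flip is needed: in Vulkan, NDC y = -1 maps to the top edge of a viewport with positive height, which matches window coordinates whose y grows downward.

```cpp
// Maps a pixel coordinate (origin at the viewport's top-left) to NDC in [-1, 1].
// Same expression as Line.vert.shader, applied to one component.
constexpr float PixelToNdc(float pos, float viewportSize) {
    return 2 * pos / viewportSize - 1;
}

// The "offset from the center, divided by half the size" form, for comparison.
constexpr float PixelToNdcCentered(float pos, float viewportSize) {
    return (pos - viewportSize / 2) / (viewportSize / 2);
}
```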

The fragment shader Line.frag.shader (what a time-saver!):

```glsl
#version 460
#pragma shader_stage(fragment)

layout(location = 0) out vec4 o_Color;

void main() {
    o_Color = vec4(1, 0, 0, 1);
}
```

The VkPushConstantRange used later when creating the pipeline:

```cpp
VkPushConstantRange pushConstantRange = { VK_SHADER_STAGE_VERTEX_BIT, 0, 24 }; //vec2 + vec2[2] = 24 bytes
```

Shaders for sampling the canvas

CanvasToScreen.vert.shader:

```glsl
#version 460
#pragma shader_stage(vertex)

vec2 positions[4] = {
    { 0, 0 },
    { 0, 1 },
    { 1, 0 },
    { 1, 1 }
};

layout(location = 0) out vec2 o_TexCoord;
layout(push_constant) uniform pushConstants {
    vec2 viewportSize;
    vec2 canvasSize;
};

void main() {
    o_TexCoord = positions[gl_VertexIndex];
    gl_Position = vec4(2 * positions[gl_VertexIndex] * canvasSize / viewportSize - 1, 0, 1);
}
```

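positions is a variable inside the shader. I didn't declare it const, so it can be modified, but a modification only affects the value within the current shader invocation (the one processing the current vertex).

Here viewportSize is the viewport size; as usual, the viewport for "render to screen" later in the code is the size of the swapchain images.

canvasSize is the canvas size, with the same value as viewportSize in Line.vert.shader above.

This shader renders the whole canvas pinned to the top-left corner of the screen, so the four vertices' positions in pixels are positions[gl_VertexIndex] * canvasSize, and every texture coordinate component is either 0 or 1.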
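A CPU-side sketch of what the vertex shader computes for each of the four triangle-strip vertices (Vec2 and CanvasQuadNdc are hypothetical names; the arithmetic mirrors the GLSL expression). When the canvas is as large as the viewport, the quad covers the whole NDC square; a smaller canvas stays pinned to the top-left corner.

```cpp
// Plain two-float vector standing in for GLSL's vec2.
struct Vec2 { float x, y; };

// Same table as CanvasToScreen.vert.shader: a triangle-strip quad over [0, 1] x [0, 1].
constexpr Vec2 kPositions[4] = { { 0, 0 }, { 0, 1 }, { 1, 0 }, { 1, 1 } };

// gl_Position.xy for vertex i (0 to 3): unit quad scaled to canvas pixels, then pixels to NDC.
constexpr Vec2 CanvasQuadNdc(int i, Vec2 viewportSize, Vec2 canvasSize) {
    return { 2 * kPositions[i].x * canvasSize.x / viewportSize.x - 1,
             2 * kPositions[i].y * canvasSize.y / viewportSize.y - 1 };
}
```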

CanvasToScreen.frag.shader:

```glsl
#version 460
#pragma shader_stage(fragment)

layout(location = 0) in vec2 i_TexCoord;
layout(location = 0) out vec4 o_Color;
layout(binding = 0) uniform sampler2D u_Texture;
layout(push_constant) uniform pushConstants {
    layout(offset = 8) vec2 canvasSize;
};

void main() {
    o_Color = texture(u_Texture, i_TexCoord);
    o_Color.g = texture(u_Texture, i_TexCoord + 8 / canvasSize).r;
    o_Color.b = texture(u_Texture, i_TexCoord - 8 / canvasSize).r;
}
```


Since the whole texture spans canvasSize pixels, i_TexCoord + 8 / canvasSize is the sampling position offset from i_TexCoord by 8 pixels both horizontally and vertically.
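Converting a pixel offset into a UV offset is a per-axis division by the texture size. A small sketch with hypothetical names; on a 1280x720 canvas, 8 / canvasSize comes out to roughly (0.00625, 0.0111).

```cpp
// Plain two-float vector standing in for GLSL's vec2.
struct Vec2 { float x, y; };

// UV coordinates span [0, 1] across canvasSize pixels, so shifting by `pixels`
// pixels means shifting by pixels / canvasSize in UV units, per axis.
constexpr Vec2 PixelOffsetToUv(float pixels, Vec2 canvasSize) {
    return { pixels / canvasSize.x, pixels / canvasSize.y };
}

// Inverse conversion: how many pixels a UV offset spans.
constexpr Vec2 UvOffsetToPixels(Vec2 uv, Vec2 canvasSize) {
    return { uv.x * canvasSize.x, uv.y * canvasSize.y };
}
```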

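Apart from Ch4-1 Shader Modules, which explains the syntax, this is the first time this tutorial uses the syntax layout(offset = byte offset from the start of the block).

This shader doesn't need the data in the first 8 bytes of the push constants. If you'd like to declare viewportSize there anyway, that's fine (note: the VkPushConstantRange below would have to change accordingly).

The VkPushConstantRanges used later when creating the pipeline: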

```cpp
VkPushConstantRange pushConstantRanges[2] = {
    { VK_SHADER_STAGE_VERTEX_BIT, 0, 16 },
    { VK_SHADER_STAGE_FRAGMENT_BIT, 8, 8 }
};
```
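To double-check the byte counts in the VkPushConstantRange declarations, the push-constant blocks can be mirrored as C++ structs. This sketch assumes a plain struct Vec2 in place of glm::vec2 (by default both are two tightly packed floats). Push-constant blocks use std430-style offsets by default, so a vec2 array has an 8-byte stride and no padding appears here.

```cpp
#include <cstddef>

// Plain stand-in for glm::vec2.
struct Vec2 { float x, y; };

// Mirrors Line.vert.shader's block; range { VERTEX, 0, 24 }.
struct LinePushConstants {
    Vec2 viewportSize; // bytes 0-8
    Vec2 offsets[2];   // bytes 8-24, array stride 8
};

// Mirrors the block shared by CanvasToScreen.vert.shader and CanvasToScreen.frag.shader;
// ranges { VERTEX, 0, 16 } and { FRAGMENT, 8, 8 }.
struct ScreenPushConstants {
    Vec2 viewportSize; // bytes 0-8, vertex stage only
    Vec2 canvasSize;   // bytes 8-16, both stages (layout(offset = 8) in the fragment shader)
};
```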

Creating the pipelines

There are five pipeline-related objects in total:

```cpp
#include "GlfwGeneral.hpp"
#include "EasyVulkan.hpp"
using namespace vulkan;

//Offscreen: drawing lines
pipelineLayout pipelineLayout_line;
pipeline pipeline_line;
//Screen: sampling the texture
descriptorSetLayout descriptorSetLayout_texture;
pipelineLayout pipelineLayout_screen;
pipeline pipeline_screen;

const auto& RenderPassAndFramebuffers_Screen() {
    static const auto& rpwf = easyVulkan::CreateRpwf_Screen();
    return rpwf;
}
const auto& RenderPassAndFramebuffer_Offscreen(VkExtent2D canvasSize) {
    static const auto& rpwf = easyVulkan::CreateRpwf_Canvas(canvasSize);
    return rpwf;
}
void CreateLayout() {
    /*To be filled*/
}
void CreatePipeline(VkExtent2D canvasSize) {
    /*To be filled*/
}
```

First create the "offscreen" pipeline layout, which has only a push constant range:

```cpp
void CreateLayout() {
    VkPushConstantRange pushConstantRange_offscreen = { VK_SHADER_STAGE_VERTEX_BIT, 0, 24 };
    VkPipelineLayoutCreateInfo pipelineLayoutCreateInfo = {
        .pushConstantRangeCount = 1,
        .pPushConstantRanges = &pushConstantRange_offscreen,
    };
    pipelineLayout_line.Create(pipelineLayoutCreateInfo);
    /*To be filled*/
}
```

Create descriptorSetLayout_texture, exactly as in Ch7-7:

```cpp
VkDescriptorSetLayoutBinding descriptorSetLayoutBinding_texture = {
    .binding = 0,
    .descriptorType = VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER,
    .descriptorCount = 1,
    .stageFlags = VK_SHADER_STAGE_FRAGMENT_BIT
};
VkDescriptorSetLayoutCreateInfo descriptorSetLayoutCreateInfo_texture = {
    .bindingCount = 1,
    .pBindings = &descriptorSetLayoutBinding_texture
};
descriptorSetLayout_texture.Create(descriptorSetLayoutCreateInfo_texture);
```

The rest needs no comment. Done:

```cpp
void CreateLayout() {
    //Offscreen
    VkPushConstantRange pushConstantRange_offscreen = { VK_SHADER_STAGE_VERTEX_BIT, 0, 24 };
    VkPipelineLayoutCreateInfo pipelineLayoutCreateInfo = {
        .pushConstantRangeCount = 1,
        .pPushConstantRanges = &pushConstantRange_offscreen,
    };
    pipelineLayout_line.Create(pipelineLayoutCreateInfo);
    //Screen
    VkDescriptorSetLayoutBinding descriptorSetLayoutBinding_texture = { 0, VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER, 1, VK_SHADER_STAGE_FRAGMENT_BIT };
    VkDescriptorSetLayoutCreateInfo descriptorSetLayoutCreateInfo_texture = {
        .bindingCount = 1,
        .pBindings = &descriptorSetLayoutBinding_texture
    };
    descriptorSetLayout_texture.Create(descriptorSetLayoutCreateInfo_texture);
    VkPushConstantRange pushConstantRanges_screen[] = {
        { VK_SHADER_STAGE_VERTEX_BIT, 0, 16 },
        { VK_SHADER_STAGE_FRAGMENT_BIT, 8, 8 }
    };
    pipelineLayoutCreateInfo.pushConstantRangeCount = 2;
    pipelineLayoutCreateInfo.pPushConstantRanges = pushConstantRanges_screen;
    pipelineLayoutCreateInfo.setLayoutCount = 1;
    pipelineLayoutCreateInfo.pSetLayouts = descriptorSetLayout_texture.Address();
    pipelineLayout_screen.Create(pipelineLayoutCreateInfo);
}
```

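Now create the line-drawing pipeline. Its code doesn't need to go into a lambda, because the canvas size doesn't have to change when the window is resized.

Compared with the earlier triangle pipeline, the differences are the primitive topology and the explicitly specified line width: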

```cpp
void CreatePipeline(VkExtent2D canvasSize) {
    static shaderModule vert_offscreen("shader/Line.vert.spv");
    static shaderModule frag_offscreen("shader/Line.frag.spv");
    VkPipelineShaderStageCreateInfo shaderStageCreateInfos_line[2] = {
        vert_offscreen.StageCreateInfo(VK_SHADER_STAGE_VERTEX_BIT),
        frag_offscreen.StageCreateInfo(VK_SHADER_STAGE_FRAGMENT_BIT)
    };
    graphicsPipelineCreateInfoPack pipelineCiPack;
    pipelineCiPack.createInfo.layout = pipelineLayout_line;
    pipelineCiPack.createInfo.renderPass = RenderPassAndFramebuffer_Offscreen(canvasSize).renderPass;
    pipelineCiPack.inputAssemblyStateCi.topology = VK_PRIMITIVE_TOPOLOGY_LINE_LIST; //Draw lines
    pipelineCiPack.viewports.emplace_back(0.f, 0.f, float(canvasSize.width), float(canvasSize.height), 0.f, 1.f);
    pipelineCiPack.scissors.emplace_back(VkOffset2D{}, canvasSize);
    pipelineCiPack.rasterizationStateCi.lineWidth = 1; //Line width
    pipelineCiPack.multisampleStateCi.rasterizationSamples = VK_SAMPLE_COUNT_1_BIT;
    pipelineCiPack.colorBlendAttachmentStates.push_back({ .colorWriteMask = 0b1111 });
    pipelineCiPack.UpdateAllArrays();
    pipelineCiPack.createInfo.stageCount = 2;
    pipelineCiPack.createInfo.pStages = shaderStageCreateInfos_line;
    pipeline_line.Create(pipelineCiPack);
    /*To be filled*/
}
```

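The pipeline for rendering to the screen differs from the triangle-pipeline code in Ch2 only in its primitive topology (ignoring file paths and variable names):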

```cpp
void CreatePipeline(VkExtent2D canvasSize) {
    //Offscreen
    static shaderModule vert_offscreen("shader/Line.vert.spv");
    static shaderModule frag_offscreen("shader/Line.frag.spv");
    VkPipelineShaderStageCreateInfo shaderStageCreateInfos_line[2] = {
        vert_offscreen.StageCreateInfo(VK_SHADER_STAGE_VERTEX_BIT),
        frag_offscreen.StageCreateInfo(VK_SHADER_STAGE_FRAGMENT_BIT)
    };
    graphicsPipelineCreateInfoPack pipelineCiPack;
    pipelineCiPack.createInfo.layout = pipelineLayout_line;
    pipelineCiPack.createInfo.renderPass = RenderPassAndFramebuffer_Offscreen(canvasSize).renderPass;
    pipelineCiPack.inputAssemblyStateCi.topology = VK_PRIMITIVE_TOPOLOGY_LINE_LIST; //Draw lines
    pipelineCiPack.viewports.emplace_back(0.f, 0.f, float(canvasSize.width), float(canvasSize.height), 0.f, 1.f);
    pipelineCiPack.scissors.emplace_back(VkOffset2D{}, canvasSize);
    pipelineCiPack.rasterizationStateCi.lineWidth = 1; //Line width
    pipelineCiPack.multisampleStateCi.rasterizationSamples = VK_SAMPLE_COUNT_1_BIT;
    pipelineCiPack.colorBlendAttachmentStates.push_back({ .colorWriteMask = 0b1111 });
    pipelineCiPack.UpdateAllArrays();
    pipelineCiPack.createInfo.stageCount = 2;
    pipelineCiPack.createInfo.pStages = shaderStageCreateInfos_line;
    pipeline_line.Create(pipelineCiPack);
    //Screen
    static shaderModule vert_screen("shader/CanvasToScreen.vert.spv");
    static shaderModule frag_screen("shader/CanvasToScreen.frag.spv");
    static VkPipelineShaderStageCreateInfo shaderStageCreateInfos_screen[2] = {
        vert_screen.StageCreateInfo(VK_SHADER_STAGE_VERTEX_BIT),
        frag_screen.StageCreateInfo(VK_SHADER_STAGE_FRAGMENT_BIT)
    };
    auto Create = [] {
        graphicsPipelineCreateInfoPack pipelineCiPack;
        pipelineCiPack.createInfo.layout = pipelineLayout_screen;
        pipelineCiPack.createInfo.renderPass = RenderPassAndFramebuffers_Screen().renderPass;
        pipelineCiPack.inputAssemblyStateCi.topology = VK_PRIMITIVE_TOPOLOGY_TRIANGLE_STRIP; //Same topology as in Ch7-7
        pipelineCiPack.viewports.emplace_back(0.f, 0.f, float(windowSize.width), float(windowSize.height), 0.f, 1.f);
        pipelineCiPack.scissors.emplace_back(VkOffset2D{}, windowSize);
        pipelineCiPack.multisampleStateCi.rasterizationSamples = VK_SAMPLE_COUNT_1_BIT;
        pipelineCiPack.colorBlendAttachmentStates.push_back({ .colorWriteMask = 0b1111 });
        pipelineCiPack.UpdateAllArrays();
        pipelineCiPack.createInfo.stageCount = 2;
        pipelineCiPack.createInfo.pStages = shaderStageCreateInfos_screen;
        pipeline_screen.Create(pipelineCiPack);
    };
    auto Destroy = [] {
        pipeline_screen.~pipeline();
    };
    graphicsBase::Base().AddCallback_CreateSwapchain(Create);
    graphicsBase::Base().AddCallback_DestroySwapchain(Destroy);
    Create();
}
```

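Clearing the canvas

There's one more step before writing the main function: a function that clears the canvas.

This isn't only about the feature itself. When we created the render pass just now, we set the attachment's initial layout to VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL, yet a freshly created image attachment is in VK_IMAGE_LAYOUT_UNDEFINED, and its contents may well be garbage.

In EasyVulkan.hpp, in the easyVulkan namespace, define the function CmdClearCanvas(...), which clears the canvas and transitions the image layout: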

```cpp
void CmdClearCanvas(VkCommandBuffer commandBuffer, VkClearColorValue clearColor) {
    /*To be filled*/
}
```

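There are three things to do: a memory barrier before the clear, calling vkCmdClearColorImage(...) to clear, and a memory barrier after the clear.

vkCmdClearColorImage(...) is a transfer command, so the barrier before the clear transitions the image layout from VK_IMAGE_LAYOUT_UNDEFINED to VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL, ready to be written: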

```cpp
void CmdClearCanvas(VkCommandBuffer commandBuffer, VkClearColorValue clearColor) {
    VkImageSubresourceRange imageSubresourceRange = { VK_IMAGE_ASPECT_COLOR_BIT, 0, 1, 0, 1 };
    VkImageMemoryBarrier imageMemoryBarrier = {
        VK_STRUCTURE_TYPE_IMAGE_MEMORY_BARRIER,
        nullptr,
        0,                                    //srcAccessMask
        VK_ACCESS_TRANSFER_WRITE_BIT,         //dstAccessMask
        VK_IMAGE_LAYOUT_UNDEFINED,            //oldLayout
        VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL, //newLayout
        VK_QUEUE_FAMILY_IGNORED,
        VK_QUEUE_FAMILY_IGNORED,
        ca_canvas.Image(),
        imageSubresourceRange
    };
    vkCmdPipelineBarrier(commandBuffer, VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT, VK_PIPELINE_STAGE_TRANSFER_BIT, 0,
        0, nullptr, 0, nullptr, 1, &imageMemoryBarrier);
    /*To be filled*/
}
```


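When this function runs a second time in the render loop, the image is already in VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL. But since we're clearing it (its existing contents don't matter), oldLayout in the pre-clear barrier can always be VK_IMAGE_LAYOUT_UNDEFINED.

Next, write the barrier after the clear.

When creating the render pass earlier, we specified VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL as the image layout at the start of the render pass, so after the clear, transition to that layout, not to VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL.

Because a subpass dependency follows, it doesn't matter even if dstStageMask is set to a stage later than VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT. Layout transitions are implicitly ordered (on the same queue they execute in submission order relative to one another, including those performed by render passes), so the transition performed by this barrier is guaranteed to happen before the one performed by the dependency at the start of the render pass: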

```cpp
void CmdClearCanvas(VkCommandBuffer commandBuffer, VkClearColorValue clearColor) {
    VkImageSubresourceRange imageSubresourceRange = { VK_IMAGE_ASPECT_COLOR_BIT, 0, 1, 0, 1 };
    VkImageMemoryBarrier imageMemoryBarrier = {
        VK_STRUCTURE_TYPE_IMAGE_MEMORY_BARRIER,
        nullptr,
        0,                                    //srcAccessMask
        VK_ACCESS_TRANSFER_WRITE_BIT,         //dstAccessMask
        VK_IMAGE_LAYOUT_UNDEFINED,            //oldLayout
        VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL, //newLayout
        VK_QUEUE_FAMILY_IGNORED,
        VK_QUEUE_FAMILY_IGNORED,
        ca_canvas.Image(),
        imageSubresourceRange
    };
    vkCmdPipelineBarrier(commandBuffer, VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT, VK_PIPELINE_STAGE_TRANSFER_BIT, 0,
        0, nullptr, 0, nullptr, 1, &imageMemoryBarrier);
    /*Clear with a command, to be filled*/
    imageMemoryBarrier.srcAccessMask = VK_ACCESS_TRANSFER_WRITE_BIT;
    imageMemoryBarrier.dstAccessMask = 0;
    imageMemoryBarrier.oldLayout = VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL;
    imageMemoryBarrier.newLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL;
    vkCmdPipelineBarrier(commandBuffer, VK_PIPELINE_STAGE_TRANSFER_BIT, VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT, 0,
        0, nullptr, 0, nullptr, 1, &imageMemoryBarrier);
}
```

Now for the main part:

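| Parameter of void VKAPI_CALL vkCmdClearColorImage(...) | Description |
|---|---|
| VkCommandBuffer commandBuffer | Handle of the command buffer |
| VkImage image | Handle of the image |
| VkImageLayout imageLayout | Layout of the image when the command executes (e.g. VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL) |
| const VkClearColorValue* pColor | Pointer to the color to clear to |
| uint32_t rangeCount | Number of ranges to clear |
| const VkImageSubresourceRange* pRanges | Pointer to an array of VkImageSubresourceRange specifying the ranges to clear |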


This function clears color images only; to clear depth-stencil images, use vkCmdClearDepthStencilImage(...).

We clear the whole image, which is trivial to write:

```cpp
void CmdClearCanvas(VkCommandBuffer commandBuffer, VkClearColorValue clearColor) {
    VkImageSubresourceRange imageSubresourceRange = { VK_IMAGE_ASPECT_COLOR_BIT, 0, 1, 0, 1 };
    VkImageMemoryBarrier imageMemoryBarrier = {
        VK_STRUCTURE_TYPE_IMAGE_MEMORY_BARRIER,
        nullptr,
        0,                                    //srcAccessMask
        VK_ACCESS_TRANSFER_WRITE_BIT,         //dstAccessMask
        VK_IMAGE_LAYOUT_UNDEFINED,            //oldLayout
        VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL, //newLayout
        VK_QUEUE_FAMILY_IGNORED,
        VK_QUEUE_FAMILY_IGNORED,
        ca_canvas.Image(),
        imageSubresourceRange
    };
    vkCmdPipelineBarrier(commandBuffer, VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT, VK_PIPELINE_STAGE_TRANSFER_BIT, 0,
        0, nullptr, 0, nullptr, 1, &imageMemoryBarrier);
    vkCmdClearColorImage(commandBuffer, ca_canvas.Image(), VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL, &clearColor, 1, &imageSubresourceRange);
    imageMemoryBarrier.srcAccessMask = VK_ACCESS_TRANSFER_WRITE_BIT;
    imageMemoryBarrier.dstAccessMask = 0;
    imageMemoryBarrier.oldLayout = VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL;
    imageMemoryBarrier.newLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL;
    vkCmdPipelineBarrier(commandBuffer, VK_PIPELINE_STAGE_TRANSFER_BIT, VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT, 0,
        0, nullptr, 0, nullptr, 1, &imageMemoryBarrier);
}
```

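Recording the rendering commands

The main function skeleton follows; it's all stuff you've seen before.

In it, define the canvas size canvasSize and write the image attachment's view into the descriptor: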

```cpp
int main() {
    if (!InitializeWindow({ 1280, 720 }))
        return -1;

    //Canvas size
    VkExtent2D canvasSize = windowSize;

    const auto& [renderPass_screen, framebuffers_screen] = RenderPassAndFramebuffers_Screen();
    const auto& [renderPass_offscreen, framebuffer_offscreen] = RenderPassAndFramebuffer_Offscreen(canvasSize);
    CreateLayout();
    CreatePipeline(canvasSize);

    fence fence;
    semaphore semaphore_imageIsAvailable;
    semaphore semaphore_renderingIsOver;

    commandBuffer commandBuffer;
    commandPool commandPool(graphicsBase::Base().QueueFamilyIndex_Graphics(), VK_COMMAND_POOL_CREATE_RESET_COMMAND_BUFFER_BIT);
    commandPool.AllocateBuffers(commandBuffer);

    VkSamplerCreateInfo samplerCreateInfo = texture::SamplerCreateInfo();
    sampler sampler(samplerCreateInfo);
    VkDescriptorPoolSize descriptorPoolSizes[] = {
        { VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER, 1 }
    };
    descriptorPool descriptorPool(1, descriptorPoolSizes);
    descriptorSet descriptorSet_texture;
    descriptorPool.AllocateSets(descriptorSet_texture, descriptorSetLayout_texture);
    //Write the descriptor
    descriptorSet_texture.Write(easyVulkan::ca_canvas.DescriptorImageInfo(sampler), VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER);

    /*To be filled*/

    while (!glfwWindowShouldClose(pWindow)) {
        while (glfwGetWindowAttrib(pWindow, GLFW_ICONIFIED))
            glfwWaitEvents();

        graphicsBase::Base().SwapImage(semaphore_imageIsAvailable);
        auto i = graphicsBase::Base().CurrentImageIndex();

        commandBuffer.Begin(VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT);
        /*To be filled*/
        commandBuffer.End();

        graphicsBase::Base().SubmitCommandBuffer_Graphics(commandBuffer, semaphore_imageIsAvailable, semaphore_renderingIsOver, fence);
        graphicsBase::Base().PresentImage(semaphore_renderingIsOver);

        glfwPollEvents();
        /*Input handling, to be filled*/
        TitleFps();

        fence.WaitAndReset();
    }
    TerminateWindow();
    return 0;
}
```


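Because every frame uses the same offscreen framebuffer, frames in flight can't be used here (that is, every frame should use the same set of synchronization objects).

I've put the input-handling logic after glfwPollEvents(). Within the loop it hardly matters where it goes, but there is a reason for this particular spot.

We first clear the canvas to fully transparent. Since we don't clear it every frame, a bool initialized to true indicates whether to clear: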

```cpp
int main() {
    /*...omitted*/
    /*To be filled*/
    bool clearCanvas = true;

    while (!glfwWindowShouldClose(pWindow)) {
        while (glfwGetWindowAttrib(pWindow, GLFW_ICONIFIED))
            glfwWaitEvents();

        graphicsBase::Base().SwapImage(semaphore_imageIsAvailable);
        auto i = graphicsBase::Base().CurrentImageIndex();

        commandBuffer.Begin(VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT);
        if (clearCanvas)
            easyVulkan::CmdClearCanvas(commandBuffer, VkClearColorValue{}), clearCanvas = false;
        /*To be filled*/
        commandBuffer.End();

        graphicsBase::Base().SubmitCommandBuffer_Graphics(commandBuffer, semaphore_imageIsAvailable, semaphore_renderingIsOver, fence);
        graphicsBase::Base().PresentImage(semaphore_renderingIsOver);

        glfwPollEvents();
        /*Input handling, to be filled*/
        TitleFps();

        fence.WaitAndReset();
    }
    TerminateWindow();
    return 0;
}
```

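Let's keep the input handling simple: pressing the left mouse button clears the canvas.

Use glfwGetMouseButton(...) to get a mouse button's state; its parameters are self-explanatory. Up to GLFW 3.4 it only ever returns GLFW_PRESS (pressed, value 1) or GLFW_RELEASE (not pressed, value 0):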

```cpp
int main() {
    /*...omitted*/
    /*To be filled*/
    bool clearCanvas = true;

    while (!glfwWindowShouldClose(pWindow)) {
        while (glfwGetWindowAttrib(pWindow, GLFW_ICONIFIED))
            glfwWaitEvents();

        graphicsBase::Base().SwapImage(semaphore_imageIsAvailable);
        auto i = graphicsBase::Base().CurrentImageIndex();

        commandBuffer.Begin(VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT);
        if (clearCanvas)
            easyVulkan::CmdClearCanvas(commandBuffer, VkClearColorValue{}), clearCanvas = false;
        /*To be filled*/
        commandBuffer.End();

        graphicsBase::Base().SubmitCommandBuffer_Graphics(commandBuffer, semaphore_imageIsAvailable, semaphore_renderingIsOver, fence);
        graphicsBase::Base().PresentImage(semaphore_renderingIsOver);

        glfwPollEvents();
        /*To be filled later*/
        clearCanvas = glfwGetMouseButton(pWindow, GLFW_MOUSE_BUTTON_LEFT);
        TitleFps();

        fence.WaitAndReset();
    }
    TerminateWindow();
    return 0;
}
```


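So the reason for putting the input handling here is obvious: it keeps clearCanvas from becoming false before the canvas has been cleared for the first time.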
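The ordering argument can be checked with a small simulation of the clearCanvas flag. Both functions are hypothetical models of the loop, not tutorial code: mouseDown[f] stands for what glfwGetMouseButton(...) would report in frame f, and each function returns which frames would record CmdClearCanvas(...).

```cpp
#include <array>
#include <cstddef>

// Input read after recording, as in the loop above.
template <std::size_t N>
constexpr std::array<bool, N> ClearsWithInputAfterRecording(const std::array<bool, N>& mouseDown) {
    std::array<bool, N> cleared{};
    bool clearCanvas = true;           // forces a clear in frame 0
    for (std::size_t f = 0; f < N; ++f) {
        if (clearCanvas) {             // recording phase
            cleared[f] = true;
            clearCanvas = false;
        }
        clearCanvas = mouseDown[f];    // after glfwPollEvents()
    }
    return cleared;
}

// Alternative ordering for comparison: input read at the top of the loop, before recording.
template <std::size_t N>
constexpr std::array<bool, N> ClearsWithInputBeforeRecording(const std::array<bool, N>& mouseDown) {
    std::array<bool, N> cleared{};
    bool clearCanvas = true;
    for (std::size_t f = 0; f < N; ++f) {
        clearCanvas = mouseDown[f];    // overwrites the initial true in frame 0
        if (clearCanvas) {
            cleared[f] = true;
            clearCanvas = false;
        }
    }
    return cleared;
}
```

With no clicks at all, the loop's ordering still clears in frame 0, whereas reading input first overwrites the initial true and never clears, leaving the canvas in VK_IMAGE_LAYOUT_UNDEFINED while the render pass expects VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL.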

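Next, before the render loop, prepare the push constant data used by "offscreen rendering".

Use glfwGetCursorPos(...) to get the cursor position; its parameters are self-explanatory. It returns coordinates relative to the top-left corner of the window's content area, which we use as the initial values of the two points passed to the shader: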

```cpp
double mouseX, mouseY;
glfwGetCursorPos(pWindow, &mouseX, &mouseY);
struct {
    glm::vec2 viewportSize;
    glm::vec2 offsets[2];
} pushConstants_offscreen = {
    { canvasSize.width, canvasSize.height },
    { { mouseX, mouseY }, { mouseX, mouseY } }
};
```

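The cursor position has to be fetched continually afterwards as well. Since there are only two points, a bool can serve as the index, and we assign to the two slots alternately: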

```cpp
int main() {
    /*...omitted*/
    double mouseX, mouseY;
    glfwGetCursorPos(pWindow, &mouseX, &mouseY);
    struct {
        glm::vec2 viewportSize;
        glm::vec2 offsets[2];
    } pushConstants_offscreen = {
        { canvasSize.width, canvasSize.height },
        { { mouseX, mouseY }, { mouseX, mouseY } }
    };
    bool clearCanvas = true;
    /*New*/ bool index = 0;

    while (!glfwWindowShouldClose(pWindow)) {
        /*...omitted*/
        glfwPollEvents();
        /*New*/ glfwGetCursorPos(pWindow, &mouseX, &mouseY);
        /*New*/ pushConstants_offscreen.offsets[index = !index] = { mouseX, mouseY };
        clearCanvas = glfwGetMouseButton(pWindow, GLFW_MOUSE_BUTTON_LEFT);
        /*...omitted*/
    }
    TerminateWindow();
    return 0;
}
```
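Because index flips before each write, the two slots always hold the two most recent cursor samples, so each frame's line joins the previous position to the current one. A Vulkan-free sketch of the update (EndpointsAfter and Vec2 are hypothetical names):

```cpp
#include <array>
#include <cstddef>

// Plain stand-in for glm::vec2.
struct Vec2 { float x, y; };

// Mirrors pushConstants_offscreen.offsets and the `offsets[index = !index] = cursor` update.
// Returns the two endpoints that would be pushed after `frames` cursor samples.
template <std::size_t N>
constexpr std::array<Vec2, 2> EndpointsAfter(Vec2 initialCursor, const std::array<Vec2, N>& cursorPerFrame, std::size_t frames) {
    std::array<Vec2, 2> offsets{ initialCursor, initialCursor };
    bool index = 0;
    for (std::size_t f = 0; f < frames; ++f)
        offsets[index = !index] = cursorPerFrame[f]; // flip first, then overwrite the older slot
    return offsets;
}
```

Which slot holds the newer point alternates from frame to frame, but a line list doesn't care about the order of its endpoints.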

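That completes the input handling. Now for the offscreen part of the rendering code, as usual: begin the render pass, bind the pipeline, update the constants, draw, end the render pass: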

```cpp
int main() {
    /*...omitted*/
    double mouseX, mouseY;
    glfwGetCursorPos(pWindow, &mouseX, &mouseY);
    struct {
        glm::vec2 viewportSize;
        glm::vec2 offsets[2];
    } pushConstants_offscreen = {
        { canvasSize.width, canvasSize.height },
        { { mouseX, mouseY }, { mouseX, mouseY } }
    };
    bool clearCanvas = true;
    bool index = 0;

    while (!glfwWindowShouldClose(pWindow)) {
        while (glfwGetWindowAttrib(pWindow, GLFW_ICONIFIED))
            glfwWaitEvents();

        graphicsBase::Base().SwapImage(semaphore_imageIsAvailable);
        auto i = graphicsBase::Base().CurrentImageIndex();

        commandBuffer.Begin(VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT);
        if (clearCanvas)
            easyVulkan::CmdClearCanvas(commandBuffer, VkClearColorValue{}), clearCanvas = false;
        //Offscreen
        renderPass_offscreen.CmdBegin(commandBuffer, framebuffer_offscreen, { {}, canvasSize });
        vkCmdBindPipeline(commandBuffer, VK_PIPELINE_BIND_POINT_GRAPHICS, pipeline_line);
        vkCmdPushConstants(commandBuffer, pipelineLayout_line, VK_SHADER_STAGE_VERTEX_BIT, 0, 24, &pushConstants_offscreen);
        vkCmdDraw(commandBuffer, 2, 1, 0, 0);
        renderPass_offscreen.CmdEnd(commandBuffer);
        /*Sample to the screen, to be filled*/
        commandBuffer.End();

        graphicsBase::Base().SubmitCommandBuffer_Graphics(commandBuffer, semaphore_imageIsAvailable, semaphore_renderingIsOver, fence);
        graphicsBase::Base().PresentImage(semaphore_renderingIsOver);

        glfwPollEvents();
        glfwGetCursorPos(pWindow, &mouseX, &mouseY);
        pushConstants_offscreen.offsets[index = !index] = { mouseX, mouseY };
        clearCanvas = glfwGetMouseButton(pWindow, GLFW_MOUSE_BUTTON_LEFT);
        TitleFps();

        fence.WaitAndReset();
    }
    TerminateWindow();
    return 0;
}
```

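Sampling to the screen, which goes right after the offscreen pass in the same command buffer: begin the render pass, bind the pipeline, bind the descriptor set, update the constants, draw, end the render pass: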

```cpp
renderPass_screen.CmdBegin(commandBuffer, framebuffers_screen[i], { {}, windowSize }, VkClearValue{ .color = { 1.f, 1.f, 1.f, 1.f } });
vkCmdBindPipeline(commandBuffer, VK_PIPELINE_BIND_POINT_GRAPHICS, pipeline_screen);
//VkExtent2D is two uint32_t under the hood, so it has to be converted to float
glm::vec2 windowSize = { ::windowSize.width, ::windowSize.height };
vkCmdPushConstants(commandBuffer, pipelineLayout_screen, VK_SHADER_STAGE_VERTEX_BIT, 0, 8, &windowSize);
//pushConstants_offscreen.viewportSize is simply canvasSize converted to vec2
vkCmdPushConstants(commandBuffer, pipelineLayout_screen, VK_SHADER_STAGE_VERTEX_BIT | VK_SHADER_STAGE_FRAGMENT_BIT, 8, 8, &pushConstants_offscreen.viewportSize);
vkCmdBindDescriptorSets(commandBuffer, VK_PIPELINE_BIND_POINT_GRAPHICS, pipelineLayout_screen, 0, 1, descriptorSet_texture.Address(), 0, nullptr);
vkCmdDraw(commandBuffer, 4, 1, 0, 0);
renderPass_screen.CmdEnd(commandBuffer);
```

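Note the following when the push constant ranges used by different shader stages overlap:

When updating the overlapping part, you must specify every shader stage involved; conversely, if a range isn't used by some stage, you must not specify that unrelated stage when updating that range (simply update the ranges used by a single stage separately from the overlapping ranges).

The following does not conform to the specification (the validation layer reports an error, although it may run fine):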

```cpp
struct {
    glm::vec2 viewportSize;
    glm::vec2 canvasSize;
} pushConstants = {
    { windowSize.width, windowSize.height },
    { canvasSize.width, canvasSize.height }
};
//Invalid: bytes 0-8 belong to no range of the fragment stage
vkCmdPushConstants(commandBuffer, pipelineLayout_screen, VK_SHADER_STAGE_VERTEX_BIT | VK_SHADER_STAGE_FRAGMENT_BIT, 0, 16, &pushConstants);
```
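The rule above can be checked mechanically, byte by byte. The sketch below is a simplified model, not the validation layer's code: it only checks the two stage-coverage rules and ignores the further requirements that offset and size be multiples of 4 and stay within maxPushConstantsSize. The stage values are copied from vulkan_core.h; the other names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

constexpr std::uint32_t kStageVertex   = 0x01; // VK_SHADER_STAGE_VERTEX_BIT
constexpr std::uint32_t kStageFragment = 0x10; // VK_SHADER_STAGE_FRAGMENT_BIT

// Same fields as VkPushConstantRange.
struct PushConstantRange { std::uint32_t stageFlags, offset, size; };

// The screen pipeline's layout: { VERTEX, 0, 16 }, { FRAGMENT, 8, 8 }.
constexpr PushConstantRange kScreenRanges[] = { { kStageVertex, 0, 16 }, { kStageFragment, 8, 8 } };

// For every byte updated:
// (1) each stage named in stageFlags must own the byte through some range;
// (2) each range containing the byte must have all of its stages named in stageFlags.
template <std::size_t N>
constexpr bool IsValidPushConstantUpdate(const PushConstantRange (&ranges)[N],
                                         std::uint32_t stageFlags, std::uint32_t offset, std::uint32_t size) {
    for (std::uint32_t pos = offset; pos < offset + size; ++pos) {
        std::uint32_t stagesOwningByte = 0;
        for (const PushConstantRange& r : ranges)
            if (pos >= r.offset && pos < r.offset + r.size) {
                stagesOwningByte |= r.stageFlags;
                if ((stageFlags & r.stageFlags) != r.stageFlags)
                    return false; // rule (2): a stage sharing this byte was left out
            }
        if ((stagesOwningByte & stageFlags) != stageFlags)
            return false;         // rule (1): a named stage has no range covering this byte
    }
    return true;
}
```

Against the screen pipeline's ranges, both calls used in the render loop pass, while the single VERTEX | FRAGMENT update of bytes 0 to 16 fails, because the fragment stage has no range covering bytes 0 to 8.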

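Run the program; the result was already shown earlier.

...Did you think that was the end of it? (Sounds familiar, doesn't it?)

Hardware differences I ran into while writing this section

While writing the sample code for this section, I ran into an unexpected problem.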

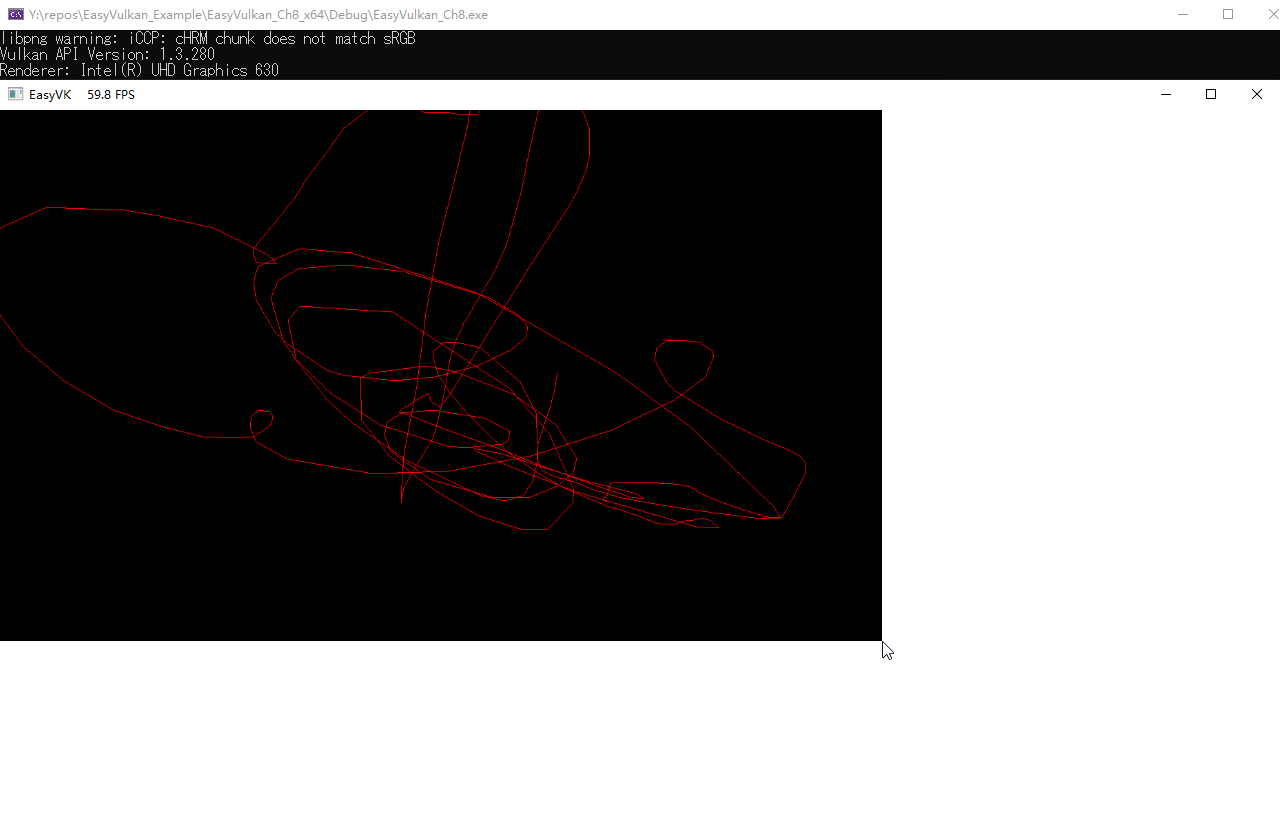

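Run the code above without any changes (you can simply run the sample code Ch8-1.hpp) on an Intel integrated GPU (my GPU models are shown in the screenshot), and this may happen: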


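This is a 1280x720 window; the white area around the canvas is also part of the window image.

In short: when "rendering to the screen", canvasSize in the push constants wasn't updated correctly, and the data at its location seems to have kept the cursor position (offsets[0] in Line.vert.shader). As for why even the blue and green lines vanished... I have no idea!

This only happens when canvasSize in the code above equals the swapchain image size, windowSize.

My suspicion: the Intel driver may have some way of checking whether updated data matches what was there before, and decides on that basis whether to record the update in the command buffer, but that logic is buggy.

(This explanation is quite possibly wrong: when running code on Intel integrated GPUs in the past, I've run into other, similar push-constant problems that it doesn't seem to explain simply.)

For this particular case, the least-effort fix I found is the following. (Strictly speaking, the first call on its own still breaks the rule given earlier, since bytes 8 to 16 also belong to the fragment stage's range; this is simply the variant that worked in my tests.)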

```cpp
struct {
    glm::vec2 viewportSize;
    glm::vec2 canvasSize;
} pushConstants = {
    { windowSize.width, windowSize.height },
    { canvasSize.width, canvasSize.height }
};
vkCmdPushConstants(commandBuffer, pipelineLayout_screen, VK_SHADER_STAGE_VERTEX_BIT, 0, 16, &pushConstants);
//On both of my GPUs the result looks the same with or without the line below, but the specification requires it
vkCmdPushConstants(commandBuffer, pipelineLayout_screen, VK_SHADER_STAGE_VERTEX_BIT | VK_SHADER_STAGE_FRAGMENT_BIT, 8, 8, &pushConstants.canvasSize);
```


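Why does that fix it? Because experiments told me it does!...

In principle this just updates the same bytes twice, and it's only a workaround for this particular case; I don't know what happens, or what to do, when there are more shader stages or the overlapping push constant ranges are more complex.

Another fix: push constants are recorded in the command buffer, so if "offscreen rendering" and "rendering to the screen" are recorded in separate command buffers, the former's push constants can't possibly affect the latter.

Another fix: regardless of whether a value is used in a given stage, declare the full push constant range in every shader stage and always update the whole range at once (very safe, just rather crude).

And yet another: ditch Vulkan and use OpenGL!...

If you've run into something similar and figured out what's going on, please let me know in a GitHub issue.

A similar problem was reported to Intel back in 2018: Vulkan Push Constants doesn't work correctly on Windows Intel GPU drivers

(Evidently it didn't get much attention from Intel.)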